ECE 542 (Neural Networks) Project 2

Oct 2020 - Nov 2020 — Raleigh, North Carolina

Overview:

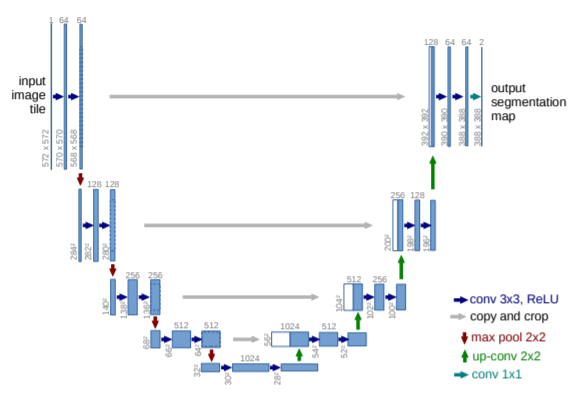

The goal of this project was to use a deep learning model to perform semantic segmentation on aerial-view images of crops from NCSU's agricultural department, classifying areas of crop damage. Model performance was evaluated using the mean IoU (intersection over union) of the predictions on the validation set. This was a project for ECE 542.

Shown above: example prediction and U-Net architecture used for performing semantic segmentation.
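For readers unfamiliar with the metric mentioned in the overview, here is a minimal sketch of one common way to compute mean IoU for integer label masks. The exact variant used for grading is not stated here, so the function name `mean_iou`, the `num_classes` argument, and the choice to skip classes absent from both masks are all assumptions made for illustration.

```python
import numpy as np

def mean_iou(y_true, y_pred, num_classes):
    """Average per-class IoU over classes present in either mask.

    Hypothetical helper: y_true and y_pred are integer label arrays
    of the same shape, with labels in the range [0, num_classes).
    """
    ious = []
    for c in range(num_classes):
        true_c = (y_true == c)
        pred_c = (y_pred == c)
        union = np.logical_or(true_c, pred_c).sum()
        if union == 0:
            continue  # class absent from both masks, so it is skipped
        intersection = np.logical_and(true_c, pred_c).sum()
        ious.append(intersection / union)
    return float(np.mean(ious)) if ious else float("nan")

# Tiny two-class example: one pixel is mislabeled as class 1.
y_true = np.array([[0, 0, 1],
                   [1, 1, 0]])
y_pred = np.array([[0, 1, 1],
                   [1, 1, 0]])
score = mean_iou(y_true, y_pred, num_classes=2)
```

In this toy case class 0 has an IoU of 2/3 and class 1 has an IoU of 3/4, so the mean is 17/24.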

My Contribution:

Group Project (3 members)

I worked with the same group as I did in Terrain Identification using Sensory Prosthetic Limb Timeseries Data.

The training images were extremely high resolution, so I wrote a MATLAB script to downsize all aerial images, along with their respective crop-damage annotations, to various sizes. I created multiple U-Net architectures in Keras to perform the semantic segmentation and trained a couple of models for each resolution. As expected, the models trained on the highest-resolution photographs and on the lowest-resolution resamples performed the worst, reflecting the trade-off between model speed/size and the resolution/detail of the photographs. I also created a script to extract intermediate layers of the model to understand why and how the model was working.
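The downsizing step described above can be sketched roughly as follows. This is not the original MATLAB script. It is a NumPy illustration with hypothetical function names, and it assumes an integer downscale factor, an `(H, W, C)` image, and an `(H, W)` integer annotation mask. The one point it is meant to show is that the image can be averaged, while the annotation should be subsampled without interpolation so that label values stay valid class IDs.

```python
import numpy as np

def downsample_image(image, factor):
    """Block-average an (H, W, C) image by an integer factor (assumed)."""
    h, w, c = image.shape
    h2, w2 = h // factor, w // factor
    # Crop so that both dimensions divide evenly by the factor.
    cropped = image[:h2 * factor, :w2 * factor].astype(np.float64)
    blocks = cropped.reshape(h2, factor, w2, factor, c)
    return blocks.mean(axis=(1, 3))

def downsample_mask(mask, factor):
    """Subsample an (H, W) label mask by keeping every factor-th pixel.

    No averaging is done, so no new (invalid) label values are created.
    """
    h, w = mask.shape
    h2, w2 = h // factor, w // factor
    return mask[:h2 * factor:factor, :w2 * factor:factor]

# Toy 4x4 RGB image and matching annotation, downsized by 2.
image = np.arange(4 * 4 * 3).reshape(4, 4, 3)
mask = np.array([[0, 0, 1, 1],
                 [0, 0, 1, 1],
                 [2, 2, 3, 3],
                 [2, 2, 3, 3]])
small_image = downsample_image(image, 2)
small_mask = downsample_mask(mask, 2)
```

Both outputs come out at the same spatial size, which keeps each image aligned with its annotation at every resolution.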

As with Terrain Identification using Sensory Prosthetic Limb Timeseries Data, I unfortunately did a disproportionate amount of the work. It was not as bad this time, but I would estimate that I did ~70%.

Literature:

Proposal:

Voros_Arpad_ECE542_Proj2Proposal.pdf

Report:

Voros_Arpad_ECE542_Proj2.pdf